ElasticSearch is a JSON database popular with log processing systems. For example, organizations often use Logstash or Filebeat to send web server logs, Windows events, Linux syslogs, and other data to ElasticSearch. Then they use the Kibana web interface to query those log events. All of this makes ElasticSearch important for cybersecurity, IT operations, and similar work.

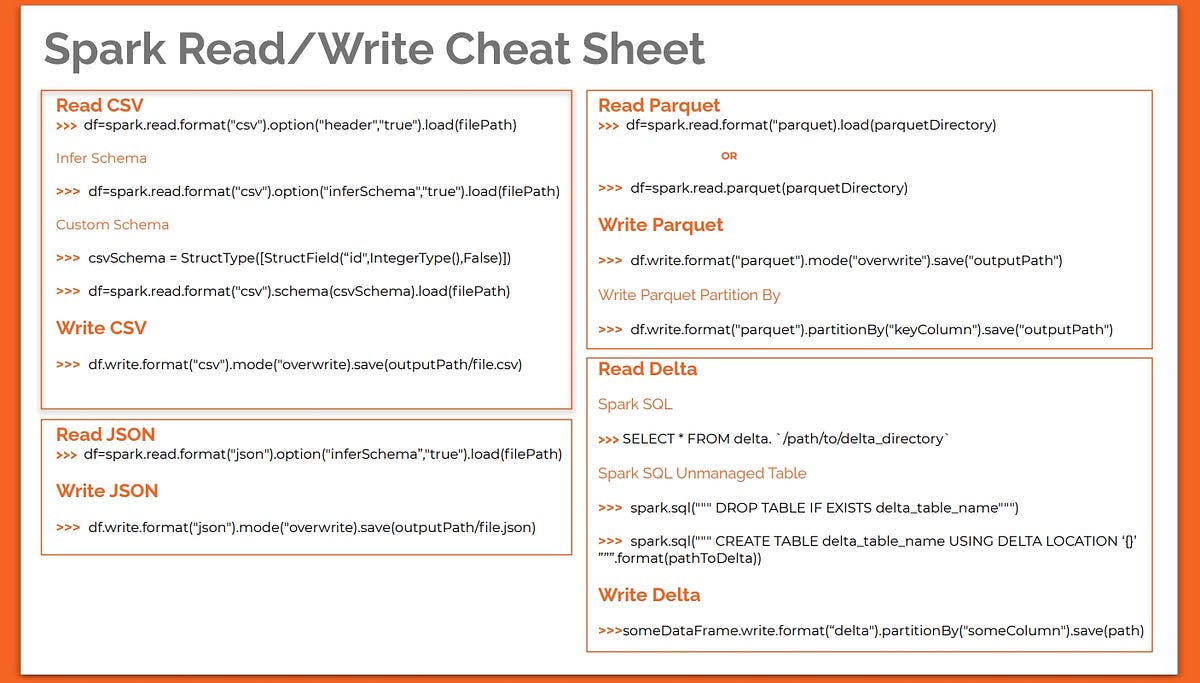

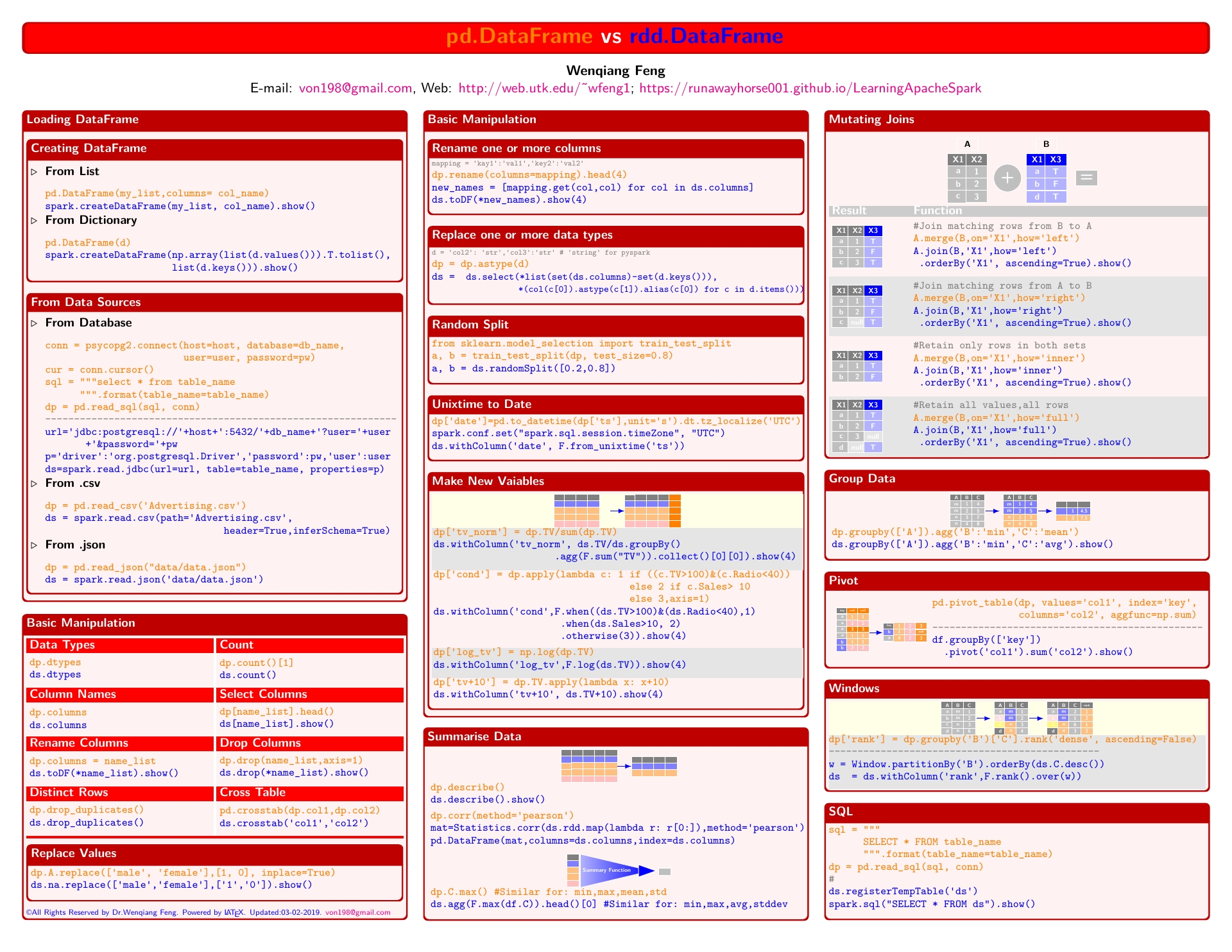

Apache Spark is a versatile big data engine that handles batch processing, real-time (streaming) processing, in-memory caching of data, and more. Spark also has a rich set of machine learning libraries that let data scientists and analytics teams build strong, interactive, and fast applications.

Elastic's Elasticsearch for Apache Hadoop project (often called Elasticsearch Hadoop or ES-Hadoop) connects ElasticSearch to big data tools. That means you can use Apache Pig and Hive to work with JSON documents in ElasticSearch, and since version 2.1 ES-Hadoop has also included native Spark support. ElasticSearch Spark is the Spark-only part of that project, packaged as its own connector jar. Here we show how to use ElasticSearch Spark.

These connectors mean you can run analytics against ElasticSearch data. On its own, ElasticSearch is built for search: it answers queries written in the Lucene query syntax or its JSON Query DSL, plus aggregations. With Spark you could go further, for example by writing predictive and classification models to flag cybersecurity events, or by doing other analysis that ES is not designed to do by itself.
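As a rough illustration of that kind of analysis, here is a minimal spark-shell sketch that uses the connector's Spark SQL data source (`org.elasticsearch.spark.sql`) to count log events by HTTP status code. The `weblogs/doc` resource and the `id`, `host`, and `status` fields are hypothetical, and the sketch seeds three sample documents itself so it has something to count. It assumes the installation steps in this article are done (ElasticSearch on the default localhost:9200, the elasticsearch-spark jar on the classpath). On ElasticSearch 7 and later, where mapping types are deprecated, the resource would typically be just the index name.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.desc

// Reuses the session that spark-shell provides, or starts a local one.
val spark = SparkSession.builder.appName("es-log-analytics").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample log events. "id" is used as the ElasticSearch document ID,
// so re-running the sketch overwrites these three documents instead of duplicating them.
val sample = Seq(
  ("1", "web01", 200),
  ("2", "web01", 404),
  ("3", "web02", 200)
).toDF("id", "host", "status")

sample.write
  .format("org.elasticsearch.spark.sql")
  .option("es.mapping.id", "id")
  .mode("append")
  .save("weblogs/doc")

// Read the index back as a DataFrame and aggregate it with ordinary Spark SQL.
val logs = spark.read.format("org.elasticsearch.spark.sql").load("weblogs/doc")
val statusCounts = logs.groupBy("status").count().orderBy(desc("count"))
statusCounts.show()
```

The same DataFrame could feed Spark MLlib instead of a simple `groupBy`, which is where the predictive models mentioned above would come in.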

(This article is part of our ElasticSearch Guide.)

Installing ElasticSearch Spark

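First you need ElasticSearch. Download a prebuilt version instead of using the Docker image. The downloaded version has authentication turned off, which saves us some steps. (ElasticSearch 8.0 and later enable security by default, so on those versions you would need to turn it off or supply credentials.)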

Next you need Apache Spark. Download a prebuilt package from the Apache Spark website and unzip it.

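Neither package requires any configuration for this example. You do not need to start Spark separately, because the spark-shell runs Spark locally inside its own process, and you do not need Hadoop either. You only need to start ElasticSearch: run `bin/elasticsearch` from the directory where you unzipped it, as a non-root user (ElasticSearch refuses to run as root).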
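
To confirm that ElasticSearch is up before moving on, you can fetch its root endpoint, which returns a short JSON description of the node and cluster. This Scala sketch assumes the default HTTP address, `http://localhost:9200`, with authentication turned off; it needs no extra jars, so it runs in a plain Scala REPL as well as in the spark-shell.

```scala
import scala.io.Source

// Assumption: ElasticSearch listens on its default HTTP port, 9200, with authentication disabled.
val esUrl = "http://localhost:9200"

// GET / returns a small JSON document with the node name, cluster name, and version.
val source = Source.fromURL(esUrl)
val banner = try source.mkString finally source.close()
println(banner)
```

If the request fails with a connection error, ElasticSearch is not running yet (or is listening on a different address).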

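Then download the ElasticSearch Spark connector jar. Pick the elasticsearch-spark build that matches your Spark and Scala versions (the artifact name encodes both, as in elasticsearch-spark-20_2.11) and whose version matches your ElasticSearch version.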

Now start the interactive Spark shell, passing the jar file with the `--jars` command line option, for example `bin/spark-shell --jars <path to the connector jar>`.

Now we can take the example program from the ElasticSearch website and paste it into the spark-shell to create data in ElasticSearch.
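A sketch along the lines of that example builds an RDD of two Scala Maps and writes each Map to ElasticSearch as one JSON document with `saveToEs`, which the `org.elasticsearch.spark._` import adds to every RDD. It assumes the `spark/docs` index/type used by the ElasticSearch example, ElasticSearch on localhost:9200 (the connector's default), and index auto-creation left on (also the default). On ElasticSearch 7 and later, where mapping types are deprecated, the resource would typically be just `spark`. In the spark-shell, `:paste` mode makes multi-line input easier.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.elasticsearch.spark._ // adds saveToEs to RDDs and esRDD to SparkContext

// Inside spark-shell this returns the existing SparkContext; elsewhere it starts a local one.
val sc = SparkContext.getOrCreate(new SparkConf().setAppName("es-spark-write").setMaster("local[*]"))

// Two documents, each a Scala Map that the connector serializes as a JSON object.
val numbers = Map("one" -> 1, "two" -> 2, "three" -> 3)
val airports = Map("arrival" -> "Otopeni", "SFO" -> "San Fran")

// Write both Maps to index "spark", type "docs". The connector's defaults
// (es.nodes=localhost, es.port=9200, es.index.auto.create=true) cover a local install.
sc.makeRDD(Seq(numbers, airports)).saveToEs("spark/docs")
```

Because ElasticSearch generates the document IDs here, running the write twice stores two more documents rather than replacing the first two.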

All this did was write an RDD to ElasticSearch. Now we can write a few simple lines of Scala to read that data from ES and make it an RDD again.
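Reading the documents back takes one call: `esRDD`, added to `SparkContext` by the same `org.elasticsearch.spark._` import, returns an RDD of pairs made of each document's ID and a Map of its fields. This sketch assumes the documents were written to the `spark/docs` resource, as in the ElasticSearch example program, and a local ElasticSearch on the default port.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.elasticsearch.spark._

// Inside spark-shell this returns the existing SparkContext; elsewhere it starts a local one.
val sc = SparkContext.getOrCreate(new SparkConf().setAppName("es-spark-read").setMaster("local[*]"))

// Each element is (documentId, fields); the fields come back as a
// scala.collection.Map[String, AnyRef].
val docs = sc.esRDD("spark/docs")
docs.collect().foreach { case (id, fields) => println(s"$id -> $fields") }

// An optional query (here in URI query syntax) is run by ElasticSearch,
// so only matching documents reach Spark.
val otopeni = sc.esRDD("spark/docs", "?q=arrival:Otopeni")
println(otopeni.count())
```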

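Note that each element of the returned RDD is a pair: the document ID and a Map of the document's fields. The documents you write do not have to be Maps; they could also be Scala case classes or JavaBeans. Those are the types of objects you can store in ElasticSearch, which makes sense, since each one maps naturally to a JSON document.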
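To store case classes instead of Maps, the same `saveToEs` call works, because the connector turns each case class field into a JSON property. The `Trip` class and the `spark/trips` resource below are hypothetical, chosen only for illustration; like the other sketches, this assumes a local ElasticSearch on the default port.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.elasticsearch.spark._

// Inside spark-shell this returns the existing SparkContext; elsewhere it starts a local one.
val sc = SparkContext.getOrCreate(new SparkConf().setAppName("es-spark-case-class").setMaster("local[*]"))

// Hypothetical document shape: each field becomes a property of the JSON document.
case class Trip(departure: String, arrival: String)

val trips = sc.makeRDD(Seq(Trip("OTP", "SFO"), Trip("MUC", "OTP")))
trips.saveToEs("spark/trips")

// Reading back still yields (id, Map) pairs, with keys "departure" and "arrival".
sc.esRDD("spark/trips").collect().foreach { case (id, fields) => println(s"$id -> $fields") }
```

Case classes give you compile-time field names when writing, while `esRDD` hands the documents back as generic Maps.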

Now we can list the ElasticSearch indices and check that the spark index is there, for example with `curl 'http://localhost:9200/_cat/indices?v'`.

The response is a table with one row per index, and it should include a row for the spark index.
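
If you would rather stay in the spark-shell, ElasticSearch's `_cat/indices` API can also be called from Scala. This sketch assumes the default address `http://localhost:9200` with authentication off, and uses the `h=index` parameter so the plain-text response contains only the index-name column, one name per line.

```scala
import scala.io.Source

// Assumption: default HTTP address and no authentication.
// h=index limits the _cat output to the index-name column.
val source = Source.fromURL("http://localhost:9200/_cat/indices?h=index")

// Force the lines into a List before closing the connection.
val indexNames = try source.getLines().map(_.trim).filter(_.nonEmpty).toList finally source.close()

indexNames.foreach(println)
println(s"spark index present: ${indexNames.contains("spark")}")
```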